Live blog

DPO Consultancy

Welcome to our live blog

Wednesday 17 Apr - 3:48 PM

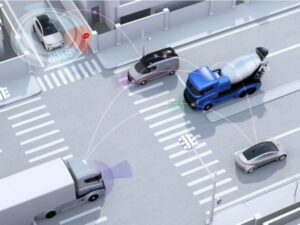

Privacy Concerns Surrounding Tracking Traffic Lights: An Urgent Call for Action

In recent years, the deployment of “tracking traffic lights” in the Netherlands has raised significant privacy concerns among both policymakers and citizens. These innovative traffic lights, designed to communicate with mobile phones of road users, have the capability to gather vast amounts of personal data, prompting intervention from the Dutch Data Protection Authority (AP). With the AP sounding the alarm once again, it is imperative for the Ministry of Infrastructure and Water Management (IenW) to take decisive action to address these privacy risks.

The concept behind tracking traffic lights is simple: they are utilized to measure traffic flow by connecting with the apps on road users’ mobile phones. However, what sets them apart from traditional detection loops is their ability to collect personal data, raising serious questions about privacy infringement. Unbeknownst to many, these traffic lights establish contact with mobile phone apps, enabling them to gather a wealth of personal information. From tracking complete travel routes, including date, time, and speed, to enabling road authorities to monitor individuals’ movements, the implications for privacy are profound.

One of the most troubling aspects is the apparent lack of consideration given by road authorities to the privacy risks associated with these tracking devices. Furthermore, it is often unclear with whom the collected data is shared and who is responsible for it, even though the GDPR requires this to be clearly established. These oversights underscore the urgent need for thorough assessment and regulation of tracking traffic lights to ensure compliance with data protection laws and safeguard individuals’ privacy rights.

The AP’s renewed call for action underscores the gravity of the situation. Having previously alerted the Ministry of IenW to the risks associated with tracking traffic lights in 2021, the AP’s recent communication emphasizes the need for immediate investigation into the design and usage of these devices to ascertain their compliance with the GDPR. Additionally, the AP urges the Ministry to engage in dialogue with all road authorities involved to address and mitigate the identified privacy risks effectively.

As concerns surrounding privacy continue to mount in an increasingly digitized world, it is incumbent upon regulatory bodies and policymakers to prioritize the protection of individuals’ personal data. In the case of tracking traffic lights, proactive measures must be taken to strike a balance between traffic management objectives and privacy considerations, ensuring that innovation does not come at the expense of fundamental rights. Only through collaborative efforts between governmental bodies, regulatory agencies, and stakeholders can effective solutions be devised to address the pressing privacy challenges posed by emerging technologies like tracking traffic lights.

Source: https://autoriteitpersoonsgegevens.nl/actueel/zorgen-ap-om-volgverkeerslichten

Monday 8 Apr - 11:36 AM

🚨BREAKING NEWS: US unveils new draft federal privacy bill

The American Privacy Rights Act (“APRA”) has been unveiled. This comprehensive draft legislation sets clear, national data privacy rights and protections for Americans, eliminates the existing patchwork of state data privacy laws and establishes robust enforcement mechanisms to hold violators accountable, including the private right of action for individuals.

One of the Senators involved has been quoted as saying, “what we see is a patchwork of state laws developing, and this draft that Sen. Cantwell and I have agreed to will establish privacy protections that are stronger than any state law on the books.”

The APRA incorporates parts of existing state laws, including those of California, Illinois and Washington, and establishes foundational uniform national data privacy rights for Americans while also giving Americans the ability to enforce those rights.

While the timing of this proposed draft legislation is unclear, policymakers have in recent weeks floated a number of draft bills, ranging from children’s privacy and the reauthorization of Section 702 to new obligations for data brokers.

This important development for organizations will continue to be monitored by DPO Consultancy and further developments will be communicated. In the event your organization has any questions, please contact us, the Experts in Data Privacy at info@dpoconsultancy.nl for assistance.

Sources:

https://iapp.org/news/a/new-draft-bipartisan-us-federal-privacy-bill-unveiled/

Wednesday 3 Apr - 5:06 PM

Employee Monitoring – Italian DPA fines companies for using facial recognition in employee attendance control

Monitoring at work encompasses a broad range of activities employers engage in to oversee the performance and conduct of their employees during work hours, regardless of the work location. The use of diverse technologies for monitoring, including camera surveillance, email monitoring systems, keystroke logging, tracking of internet activity, GPS tracking, biometric systems, and productivity tracking software, reflects the evolving nature of workplace oversight. As technology advances, adherence to data protection laws to ensure lawful and fair monitoring remains paramount.

The GDPR allows the monitoring of employees, as long as it complies with specific data protection requirements. A recent ruling by the Italian Data Protection Authority (“Garante”) showcases an instance of employee monitoring that breached data protection and privacy rules.

The Garante has sanctioned five companies with fines ranging from 2,000 to 70,000 euros for the unauthorized use of biometric data through facial recognition to monitor employee attendance. This practice was deemed a violation of employee privacy rights, as the GDPR does not allow the use of biometric data, which constitutes a special category of personal data, for such purposes without a legal exception under Article 9 of the GDPR. The sanctions were issued against companies operating at the same waste disposal site after the Garante received complaints from numerous employees.

The Garante’s investigation revealed the companies’ failure to comply with both national and EU laws regarding employee rights and data protection. Notably, it was discovered that three of the companies had shared a biometric detection system for over a year without implementing the necessary technical and security measures. This same system, found to be illegal, was also used across nine additional offices by one of the penalized companies. Moreover, the companies failed to provide employees with comprehensive information about the data collection, which violates the transparency principle, and failed to conduct the mandatory data protection impact assessment.

The Garante underlined that the companies should have considered less intrusive methods of tracking employee attendance, such as a badge. As part of the investigation, the DPA has also mandated the deletion of all illegally collected data, emphasizing the need for adherence to privacy laws and the protection of employee rights against invasive monitoring technologies.

If your organization has questions about the privacy implications of employee monitoring, contact us at info@dpoconsultancy.nl for assistance.

Source: https://www.gpdp.it/home/docweb/-/docweb-display/docweb/9997208#1

Friday 15 Mar - 4:34 PM

The AI Act and the GDPR: what does it mean for companies?

What is the EU AI Act and what does it mean for companies who use AI Solutions?

On the 13th of March 2024, the AI Act passed the scrutiny of the European Parliament and is ready to become a law of the Union. This comprehensive regulatory framework aims to govern the development and use of artificial intelligence (AI) across the European Union (EU).

The AI Act’s primary aim is to ensure that AI technologies are developed and used in a manner that is ethical, transparent, and respects fundamental rights. It covers a wide range of AI systems used in various sectors, including healthcare, transport, and finance.

In particular,

- it subjects high-risk AI systems (such as those used in critical infrastructure, law enforcement, and employment) to strict regulatory requirements

- it prohibits certain AI practices that pose significant risks to individuals or society, such as human behaviour manipulation, the exploitation of vulnerabilities, or social scoring

- it exposes non-compliant organizations to significant fines of up to €35 million or 7% of their global annual turnover, whichever is higher

- it introduces a conformity assessment for AI systems. This assessment is designed to foster accountability and only applies to AI systems classified as ‘high-risk’

Where does the AI Act Apply? Also outside the EU?

Like the GDPR, the AI Act carries significant extraterritorial implications. It extends its jurisdiction to providers who introduce AI systems in the EU market, regardless of their geographical location. Additionally, it encompasses providers or deployers situated outside the European Union whose AI systems are utilized within the EU.

Significant exclusions are in place. The AI Act does not apply to AI systems developed and used for scientific research and development purposes. Furthermore, the Act exempts research, testing, and development activities related to AI before market placement or deployment, excluding real-world testing.

When will the AI Act come into force?

The Act is currently undergoing a final review by lawyer-linguists and is anticipated to be formally adopted before the conclusion of the legislative session. Additionally, the law necessitates formal endorsement by the Council.

Upon publication in the Official Journal, the regulation will become effective after twenty days. It will become fully enforceable twenty-four months after it enters into force, with certain exceptions:

- prohibitions on restricted practices will take effect six months following the entry into force;

- codes of practice will be applicable nine months after entry into force;

- general-purpose AI regulations, including governance, will come into effect twelve months after entry into force;

- obligations for high-risk systems will be enforced thirty-six months after entry into force.
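To make the staggered timeline above easier to plan against, here is a minimal TypeScript sketch of the calendar arithmetic. The publication date passed in, the helper names (`addDays`, `addMonths`, `aiActMilestones`) and the field names are illustrative assumptions, not part of the regulation. The offsets are the ones listed above, and the dates set out in the published text remain decisive.

```typescript
// Sketch only: mirrors the offsets listed in this post, not the binding legal text.

interface AiActMilestones {
  entryIntoForce: string;   // publication + 20 days
  prohibitions: string;     // entry into force + 6 months
  codesOfPractice: string;  // entry into force + 9 months
  generalPurposeAi: string; // entry into force + 12 months
  generalApplication: string; // entry into force + 24 months
  highRisk: string;         // entry into force + 36 months
}

// Add whole days, working in UTC to avoid time zone surprises.
function addDays(date: Date, days: number): Date {
  return new Date(date.getTime() + days * 24 * 60 * 60 * 1000);
}

// Add calendar months, clamping to the last day of the target month
// (for example 31 August + 6 months becomes the last day of February).
function addMonths(date: Date, months: number): Date {
  const year = date.getUTCFullYear();
  const month = date.getUTCMonth() + months;
  const lastDayOfTarget = new Date(Date.UTC(year, month + 1, 0)).getUTCDate();
  const day = Math.min(date.getUTCDate(), lastDayOfTarget);
  return new Date(Date.UTC(year, month, day));
}

// Format as YYYY-MM-DD.
function isoDate(date: Date): string {
  return date.toISOString().slice(0, 10);
}

function aiActMilestones(publication: Date): AiActMilestones {
  const entry = addDays(publication, 20);
  return {
    entryIntoForce: isoDate(entry),
    prohibitions: isoDate(addMonths(entry, 6)),
    codesOfPractice: isoDate(addMonths(entry, 9)),
    generalPurposeAi: isoDate(addMonths(entry, 12)),
    generalApplication: isoDate(addMonths(entry, 24)),
    highRisk: isoDate(addMonths(entry, 36)),
  };
}

// Hypothetical publication date, chosen purely for illustration.
const exampleMilestones = aiActMilestones(new Date(Date.UTC(2024, 5, 1)));
console.log(exampleMilestones);
```

Swapping in the actual publication date gives a rough planning calendar; any deadline used for compliance purposes should be checked against the Official Journal text.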

The AI Act and the GDPR: how to efficiently combine these regulations?

The AI Act does not affect or amend the GDPR or the ePrivacy Directive. However, the deployment of AI solutions must not interfere with GDPR principles, because these activities still involve the processing of personal data. These are some examples.

- Record of Processing Activities (RoPA): AI-related processes are processing activities that must be mapped in this important document.

- Privacy Impact Assessment (PIA) and/or Data Protection Impact Assessment (DPIA): a new processing activity with AI technology usually requires one of these risk assessment procedures.

- Technical and Organizational Security Measures (and other accountability measures): new policies (e.g. a comprehensive policy on AI use), procedures, and assessments (such as the Fundamental Rights Impact Assessment for high-risk AI systems or the conformity assessment for AI systems) are important to guarantee a safe and GDPR-compliant use of AI technology.

We are well-equipped and ready to help you handle the interactions between AI Governance and Data privacy! If you want to include AI compliance in your privacy journey, please contact us at info@dpoconsultancy.nl.

Wednesday 13 Mar - 10:36 AM

EDPS finds European Commission’s use of Microsoft 365 infringes EU data protection law

After its inquiry, the European Data Protection Supervisor (EDPS) found that the European Commission breached numerous essential data protection rules while using Microsoft 365. As a consequence, the EDPS has mandated that the Commission implement specific corrective actions.

The EDPS discovered that the Commission breached several aspects of the EU Regulation 2018/1725, relating to EU data protection rules for EU institutions and entities, notably those regarding data transfers outside the European Economic Area (EEA). Specifically, it did not ensure that transferred personal data received equivalent protection as within the EEA. Additionally, the Commission’s contract with Microsoft lacked detailed specifications on the collection and intended use of personal data via Microsoft 365. The Commission’s breaches involved inadequate data processing carried out on its behalf, including transfers of personal data.

As a result, the EDPS has mandated that starting 9 December 2024, the Commission must halt all data transfers from its use of Microsoft 365 to Microsoft and its related entities in non-EEA countries lacking adequacy decisions. Additionally, it’s required that the Commission align its Microsoft 365-related data handling practices with Regulation 2018/1725, ensuring all operations are compliant by the specified date.

In its press release, the EDPS notes that it recognizes the importance of not hindering the Commission’s ability to carry out its tasks in the public interest or to exercise official authority vested in the Commission while providing sufficient time for it to adjust data flows and align its data processing practices with the Regulation 2018/1725 requirements.

Background information

In May 2021, the EDPS launched an investigation into the Commission’s usage of Microsoft 365, following the Schrems II decision. The purpose was to assess whether the Commission adhered to the EDPS’s earlier recommendations concerning Microsoft’s products and services within EU institutions. This inquiry is a component of the EDPS’s involvement in the 2022 Coordinated Enforcement Action led by the EDPB.

If your organization has questions about international data transfers, contact us at info@dpoconsultancy.nl for assistance.

Thursday 29 Feb - 2:11 PM

HIPAA: Safeguarding Health Data in the Data Protection Landscape

In an era in which data breaches are not just a possibility but an unavoidable threat, the Health Insurance Portability and Accountability Act (HIPAA) stands as a ray of hope and security for the healthcare industry. HIPAA is more than just a regulatory requirement.

Since its implementation in 1996, HIPAA has come to be associated with safeguarding private patient health information, adapting over the years to address the challenges and opportunities presented by the digital age.

The 3 rules of HIPAA are:

- the HIPAA Privacy Rule,

- the HIPAA Security Rule, and

- the HIPAA Breach Notification Rule.

The Privacy Rule and the Security Rule are the cornerstones of HIPAA to ensure the confidentiality, integrity and availability of Protected Health Information (PHI), which is the main concern of the regulation.

The information protected by HIPAA

The HIPAA Privacy Rule establishes national standards for the protection of PHI by covered entities and their business associates. It emphasizes the significance of patients’ rights to their health information, in particular the ability to see, receive a copy of, and request corrections to their medical records. According to the rule, PHI may only be used and disclosed for treatment, payment, and healthcare operations, and for no other reason without the consent of the patient.

The Security Rule, on the other hand, sets standards for protecting PHI that is held or transferred in electronic form. It describes the technical, administrative, and physical security measures that guarantee the security of electronic PHI (ePHI) and prevent unwanted access or breaches. These safeguards include transmission security, integrity controls, audit controls, and access controls.

HIPAA and digital health

As healthcare evolves with the integration of digital health technologies, HIPAA has become more important than ever. These technologies bring new concerns for data security while also increasing accessibility and efficiency in the delivery of healthcare. HIPAA’s requirement for regular risk assessments aids in identifying weaknesses and putting in place suitable security measures.

Achieving HIPAA compliance is not just a regulatory requirement but a commitment to patient privacy and trust. It entails an ongoing process of staff training, privacy awareness-building, and security measure evaluation and improvement inside healthcare companies.

The Future of HIPAA Compliance

The journey of HIPAA compliance is ongoing, with future amendments expected to address emerging technologies and privacy challenges. To protect patients’ rights and the privacy of their medical records in the digital age, this dynamic framework will continue to shape healthcare data protection.

If you want to learn more about navigating HIPAA, you can read our whitepaper about HIPAA or contact us at info@dpoconsultancy.nl.

Wednesday 21 Feb - 3:40 PM

Embracing the Google Consent Mode V2

The digital advertising landscape has seen significant changes due to the enforcement of various regulations affecting user consent and the handling, security and use of personal data. Notably, the Digital Markets Act (DMA) (March 2024) imposes new requirements on major companies like Google, Amazon, Meta and Microsoft, designating them as ‘gatekeepers’. At the same time, the legislation clearly puts an obligation on such gatekeepers: they are responsible for obtaining user consent for their core platform services. Consequently, Google sees a clear need to implement Google Consent Mode V2 to make sure that it adheres to privacy legislation itself.

Consent Mode, developed by Google, enables the transmission of consent signals from websites’ cookie banners directly to Google. This ensures that the user’s consent preferences are, in fact, honored. In practice, this tool provides a direct line of communication between the website, where the user has indicated whether they agree to share personal data, and Google, which uses that data for advertising and personalization. It is an effective and efficient tool that streamlines procedures while at the same time providing users with more control regarding their personal data. When the user does opt to provide consent, Google can utilize these tools for detailed analytics. Conversely, if the user chooses not to consent, Google restricts the use of cookies and identifiers accordingly.

The key enhancement lies in the addition of two new consent states: ad_user_data and ad_personalization, which reflect a user’s consent status.

- ad_user_data indicates whether the user has consented to their data being sent to Google for advertising purposes. It requires a pro-active choice: the user must actively agree to share their data with Google via the consent banner on the website of the respective business.

- ad_personalization governs the use of data for personalized advertising, such as remarketing. This also requires a pro-active choice to provide consent via the cookie banner on the website of the respective business.
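As an illustration of how a cookie banner might pass these signals on, here is a minimal TypeScript sketch. It assumes the `gtag('consent', 'default' | 'update', {...})` commands of Consent Mode with the four signals `ad_storage`, `analytics_storage`, `ad_user_data` and `ad_personalization`. The `BannerChoices` shape, the helper names and the mapping of a single marketing toggle onto the three advertising signals are hypothetical choices made for this example. The `gtag` function is passed in as a parameter so that the sketch stays self-contained.

```typescript
type ConsentValue = "granted" | "denied";

// The four consent signals used by Consent Mode v2.
interface ConsentState {
  ad_storage: ConsentValue;
  analytics_storage: ConsentValue;
  ad_user_data: ConsentValue;       // new in v2
  ad_personalization: ConsentValue; // new in v2
}

// Hypothetical shape of what a cookie banner reports after the user chooses.
interface BannerChoices {
  marketing: boolean;
  analytics: boolean;
}

// Narrow type for the consent commands; the real gtag accepts more commands.
type Gtag = (
  command: "consent",
  action: "default" | "update",
  state: ConsentState
) => void;

const DENIED_BY_DEFAULT: ConsentState = {
  ad_storage: "denied",
  analytics_storage: "denied",
  ad_user_data: "denied",
  ad_personalization: "denied",
};

// Before the user has interacted with the banner, nothing is granted.
function setDefaultConsent(gtag: Gtag): void {
  gtag("consent", "default", { ...DENIED_BY_DEFAULT });
}

// Assumption: one "marketing" toggle drives all three advertising signals.
function toConsentState(choices: BannerChoices): ConsentState {
  const ads: ConsentValue = choices.marketing ? "granted" : "denied";
  return {
    ad_storage: ads,
    analytics_storage: choices.analytics ? "granted" : "denied",
    ad_user_data: ads,
    ad_personalization: ads,
  };
}

// Called once the user has actively made a choice in the banner.
function updateConsent(gtag: Gtag, choices: BannerChoices): void {
  gtag("consent", "update", toConsentState(choices));
}
```

On a real page, `setDefaultConsent` would run before any Google tags fire and `updateConsent` would run from the banner's callback. A banner that offers separate toggles for ad data sharing and ad personalization would map each toggle to its own signal instead of sharing one.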

For businesses that wish to maintain their use of Google Ads within the European Economic Area (EEA) markets, embracing or upgrading to Consent Mode v2 is simply necessary. This ensures accurate conversion tracking and efficient optimization of advertising expenditure. Failure to implement Google Consent Mode v2 by March 2024 means missing out on valuable insights. Consequently, businesses would analyze website performance and optimize Google Ads campaigns based on incomplete or inaccurate data, a clear problem for businesses relying on such information. Moreover, without Consent Mode v2, advertisers won’t be able to add new EEA users to their target lists post-March 2024. This severely impacts the effectiveness of remarketing and engagement campaigns, as well as all metrics pertaining to EEA users. Therefore, lacking Consent Mode v2 renders measurement, reporting, audience list management, and remarketing efforts in the EEA largely ineffective.

To conclude, to continue to enjoy the full workings of Google Ads, it is necessary to embrace the Google Consent Mode V2. For more information, do not hesitate to contact us at info@dpoconsultancy.nl.

Source: Google announces Consent Mode v2 – What does it mean? (cookieinformation.com)

Tuesday 13 Feb - 11:28 AM

Not answering a DSAR leads to serious fines, even if the information is easily accessible online

Data Subject Access Requests (DSAR) from Employees: not answering causes Serious Fines even if the Information is Easily Accessible Online

In a recent development, the Italian DPA has taken decisive action against Autostrade per l’Italia and Amazon Italia, fining them €100,000 and €40,000 respectively for mishandling Data Subject Access Requests (DSARs) from (former) employees. Article 15 GDPR outlines the Data Subject’s right of access, and its pivotal role has also been acknowledged by the European Data Protection Board (EDPB) in its Guidelines 01/2022 on the right of access, as updated on the 28th of March 2023. In particular, this right allows individuals to confirm the processing of their data, access personal information, and obtain details about the processing, including

- purposes,

- categories of data,

- recipients,

- storage period.

The EDPB emphasizes the interconnectedness of the right of access with other GDPR provisions and underscores that limitations should only be placed by other GDPR Articles (such as Articles 15(4) and 12(5), aimed at preventing infringement on others’ rights and addressing manifestly unfounded or excessive requests).

Employees Request for Access to Work-related Information (Autostrade per l’Italia)

Autostrade faced complaints from 50 employees. They requested access to their:

- personal files,

- pay slips,

- information relating to the processing of data for the calculation of their pay slips,

without receiving any reply. Autostrade argued that it did not reply for the following reasons:

- To safeguard its right to defend itself in several lawsuits that involved the data subjects.

- The employees could have easily retrieved the information on an online platform.

- Employees had been informed about their privacy rights in the privacy policy according to Article 13 GDPR.

Notwithstanding these arguments, the Italian DPA stated that Autostrade should have replied to the employees’ requests anyway and informed them about the reason for the denial of access. Failing to do so led to a fine of €100,000.

Former Employee Request to Access to Personnel File (Amazon Italia)

A former Amazon employee requested a copy of the personnel file. Amazon did not reply to the DSAR, arguing that it was too broad and generic. Only after the Italian DPA requested information (and, significantly, six months after the request) did Amazon send the employee a copy of the personnel file. Notwithstanding this, the Italian DPA stated that Amazon:

- should have replied to the employee within one month, as provided by Article 12(3) GDPR;

- should have informed the employee that more information was required to specify the request.

In other words, the fact that the DSAR was too broad and generic did not exclude the Company’s duty under the GDPR to answer the DSAR.

Conclusions

In both cases, the companies failed to provide timely and adequate responses, violating the Data Subjects’ right of access. Autostrade should have replied even if the information was easily retrievable elsewhere, and Amazon should have replied by asking the Data Subject to send a more specific request. The decision to stay silent cost the companies tens of thousands of euros in fines that could have been easily avoided.

These recent regulatory actions highlight the critical role of the right of access in ensuring individuals’ control over their personal data. The Italian DPA stresses the necessity of providing motivated responses even in denial cases, informing individuals about the right to appeal. Additionally, it emphasizes that broad and generic access requests should not excuse delayed responses; instead, companies should seek clarification promptly.

In conclusion, organizations must recognize the centrality of access rights within privacy policies, adopting a proactive approach to address requests in a timely and transparent manner. These fines underscore once again the importance of compliance and the responsibility organizations bear in upholding individuals’ privacy rights. If you want help in structuring your DSAR policy or verifying it is up to date please contact us, we are happy to help you.

Wednesday 7 Feb - 4:03 PM

The Dutch Data Protection Authority is going to monitor cookie banners more closely

This year, the Dutch Data Protection Authority (AP) plans to increase its scrutiny of cookie consent practices to ensure compliance with regulations. Practice has shown that organizations quite often make use of misleading cookie banners, for example by hiding rejection buttons or requiring the consumer to go through various clicks before rejecting cookies.

Aleid Wolfsen, chairman of the Dutch DPA, stated that: “With tracking software or tracking cookies, organizations can look at your internet behavior. You can’t just do that, because what you do on the internet is very personal. An organization is only allowed to keep track of that if you explicitly agree to it. And you should have the option to refuse this tracking software, without it being detrimental to you.” This clearly highlights the importance of requesting consent in the correct way; it ensures that consumers have, and remain in, control over what they share and do not share.

Targeted Advertising

Websites make use of functional, analytical, and tracking cookies. These cookies frequently handle personal data, such as the consumer’s website entry point, visited websites or apps, duration spent on specific pages, clicked links, and even search queries. This facilitates organizations in crafting user profiles and delivering personalized advertisements. However, when employing these practices, organizations must adhere to the GDPR and the ePrivacy Directive.

Misleading Cookie Banners

It’s essential to retain control over personal data while browsing. A critical step in this direction is offering transparent information about cookie usage, enabling consumers to make informed consent decisions. Organizations should implement legally compliant cookie banners, steering clear of deceptive practices like concealing decline options in a separate layer of the banner (which necessitates additional clicks before the option to reject is available) or automatically selecting ‘accept’ checkboxes.

Correct Cookie Banners

The AP specifies important elements of compliant cookie banners, such as furnishing purpose information, abstaining from automatic checkmarks, employing transparent language, and offering consumers a genuine, clear choice within the initial layer of the cookie banner. It emphasizes not requiring consumers to repeatedly click to decline cookies. The AP hereby seems to align with many other EU member states that have also indicated that the consumer should be presented with both the ‘accept’ and ‘reject all’ options in the first layer.

Dutch Data Protection Authority Investigation

If organizations don’t obtain appropriate consent for cookies and tracking software, the AP has the authority to investigate and enforce compliance, potentially imposing fines.

For assistance in ensuring GDPR and ePrivacy Directive compliance with your organization’s cookie banner, please contact us at info@dpoconsultancy.nl.

Source: AP pakt misleidende cookiebanners aan | Autoriteit Persoonsgegevens

Wednesday 31 Jan - 3:13 PM

Dutch Data Protection Authority Initiates European Procedure on Privacy and Personalized Ads

The Dutch Data Protection Authority (Autoriteit Persoonsgegevens or AP), in collaboration with the privacy watchdogs of Norway and Germany, is set to launch a European procedure addressing privacy concerns related to personalized advertisements. The regulators aim to present a clear stance, in conjunction with their EU counterparts, on how online platforms obtain user consent for displaying personalized ads.

Some online platforms assert that users can only continue to use their services for free if they agree to the utilization of their personal data for targeted advertising. For the AP, it is crucial that privacy protection is not exclusive to those who can afford it. Privacy is deemed a fundamental right that should be equally safeguarded for everyone.

EDPB’s Timely Decision

Within 8 weeks, the European Data Protection Board (EDPB), which includes European privacy watchdogs such as the AP, will issue a position on this matter.

Aleid Wolfsen, Chair of the AP, emphasizes the significance of maintaining control over personal data, especially in the era of extensive online tracking. He questions whether privacy is becoming a luxury reserved for the affluent and expects the EDPB’s forthcoming position to significantly impact how tech companies handle user privacy.

‘Pay or Okay’ Model

Online platforms are only allowed to display personalized ads with user consent, as per previous European rulings. However, some platforms employ the ‘pay or okay’ model, where users must pay a monthly fee if they do not agree to the use of their personal data for targeted ads.

The privacy authorities, working collectively within the EDPB, aim to swiftly determine whether the ‘pay or okay’ model complies with the General Data Protection Regulation (GDPR).

Free Consent

The GDPR mandates that companies processing personal data must have a legal basis, such as consent. Consent should be freely given without coercion, and individuals should have the option to refuse the processing of their personal data without facing adverse consequences.

The ‘pay or okay’ policy poses challenges, particularly concerning major online platforms with a large user base. Users might feel dependent on these platforms due to social connections or the presence of essential information and popular content.

Crucial questions include whether consent for data processing is given under duress, whether the pricing is fair, and whether refusal results in adverse consequences, especially for individuals with lower incomes. The lack of a uniform European approach among national regulators currently complicates the matter. DPO Consultancy will monitor developments in this regard and provide an update in due course.

Wednesday 24 Jan - 9:40 AM

Amazon France fined €32 million for unlawful employee monitoring

On 23 January 2024, the French Data Protection Authority (“CNIL”) published its decision, which was issued on 27 December 2023, regarding the fine it imposed upon Amazon France for numerous violations of the General Data Protection Regulation (“GDPR”) following an investigation. The fine imposed amounts to €32 million.

Background

The CNIL investigated Amazon France after press articles were published on the practices implemented by Amazon France and after receiving numerous complaints from employees.

The Amazon warehouses, which are located in France, are managed by Amazon France. As part of this, each warehouse employee is equipped with a scanner, which documents the execution of certain tasks. Every time an employee makes use of the scan function, it results in the recording of data that can be used to calculate values relating to the quality, productivity, and periods of inactivity of each employee.

Findings of the CNIL

Employee data was collected in real time, and all of the data reported was kept for 31 days. The smallest details of an employee’s productivity were available to supervisors. The CNIL determined that supervisors should instead rely on data reported in real time to identify difficulties encountered by employees, and that a selection of aggregated data, which is already being collected, should otherwise be sufficient. Thus, Amazon France was found to be in violation of Article 5(1)(c) GDPR as it did not process personal data in a manner that is relevant, adequate and limited to what is necessary in relation to the purposes of the processing.

Regarding the monitoring of employees, the CNIL indicated that the three methods of monitoring – when an employee scans an item too quickly, the idle time indicator indicating interruptions of 10 minutes or more and latency times of less than 10 minutes – cannot be based upon legitimate interest as the methods are excessively intrusive. Therefore, in the absence of a lawful basis, Amazon France was found to have violated Article 6 GDPR.

Numerous employees were contracted on a temporary basis and the confidentiality and privacy policy of Amazon France was not provided before the collection of their personal data. The CNIL held that the information provided to temporary workers on the company’s intranet was insufficient due to the fact that temporary workers were not requested to read it and this was not the most appropriate method of informing temporary workers who did not have access to an office computer during working hours.

Lastly, the information provided about the video surveillance was not properly communicated to employees and external visitors and the access to the video surveillance was found to be insufficiently secured as the password access was not robust and access accounts were shared between multiple employees. Regarding the characteristics of processing and risks involved, the CNIL found that Amazon France was in violation of Article 32 GDPR for failure to guarantee a level of security appropriate to the risk of processing.

To ensure that your organization does not receive a fine similar to the one discussed, contact us, the Experts in Data Privacy at info@dpoconsultancy.nl, for further assistance.

Source:

Wednesday 17 Jan - 1:08 PM

Importance of DPIAs: Dutch Authority Imposes Fine on ICS for Compliance Lapse

The Dutch Data Protection Authority (AP) has fined International Card Services B.V. (ICS) 150,000 euros for not conducting a required Data Protection Impact Assessment (DPIA), as mandated by the General Data Protection Regulation (GDPR). DPIAs are crucial for organizations to systematically identify and mitigate privacy risks associated with processing personal data.

DPIAs are essential for legal compliance, ensuring organizations meet GDPR requirements. They offer a proactive approach to privacy management, allowing organizations to identify, evaluate, and address potential risks before they escalate. This not only helps in preventing costly data breaches but also showcases an organization’s commitment to transparency, accountability, and responsible data processing.

Here are several reasons why conducting DPIAs is so important:

- Risk Identification and Mitigation:

- DPIAs help organizations systematically identify and evaluate the risks that may arise from their data processing activities. This includes assessing the likelihood and severity of potential negative impacts on individuals’ privacy.

- By recognizing and understanding these risks, organizations can implement appropriate measures to mitigate them, reducing the likelihood of data breaches, identity theft, or other privacy-related issues.

- Legal Compliance:

- GDPR requires organizations to conduct DPIAs for processing activities that are likely to result in high risks to individuals’ rights and freedoms. Failing to conduct a DPIA when required can lead to legal consequences, including fines and penalties.

- Transparency and Accountability:

- Performing DPIAs demonstrates an organization’s commitment to transparency and accountability in its data processing practices. It shows that the organization is proactively assessing and addressing potential privacy risks, promoting a culture of responsibility.

- Building Trust with Stakeholders:

- Individuals are becoming increasingly aware of the importance of privacy, and they expect organizations to handle their personal data responsibly. Conducting DPIAs helps build trust with customers, employees, and other stakeholders by showcasing a commitment to protecting their privacy.

- Proactive Privacy Management:

- DPIAs are a proactive tool for privacy management. Instead of reacting to privacy issues after they occur, organizations can anticipate and address potential problems in advance, minimizing the negative impact on individuals and the organization’s reputation.

- Avoiding Costly Data Breaches:

- Identifying and mitigating risks through DPIAs can help organizations avoid costly data breaches. The financial and reputational damage caused by a data breach can be significant, and DPIAs serve as a preventive measure to minimize such risks.

- Continuous Improvement:

- DPIAs are not a one-time activity; they contribute to a continuous improvement cycle. Organizations should regularly revisit and update DPIAs to account for changes in processing activities, technology, or regulations, ensuring ongoing compliance and effectiveness.

In summary, conducting DPIAs is a proactive and essential practice for organizations to meet legal requirements, protect individuals’ privacy, build trust, and avoid the negative consequences associated with privacy breaches. It is a foundational element of a robust privacy management framework in today’s data-driven landscape.

Does your organization need to conduct any DPIAs, or do you have any questions on this topic? Contact us, the Experts in Data Privacy, at info@dpoconsultancy.nl for assistance.

Thursday 11 Jan - 3:10 PM

Data Controller Liability and its Limits according to the CJEU

In December 2023, the Court of Justice of the European Union (CJEU) ruled on the matter of data controller liability for processing activities carried out by its processor. In case C-683/21, the Court stated that there are limits to this liability. In other words, the controller-processor relationship is not by itself sufficient to make the controller liable if:

- the processor processes personal data for its own purposes

- the processor acts in a manner that is incompatible with the arrangements set by the controller

- it is reasonable to conclude that the controller didn’t agree to the processing

Case C-683/21 Background

During the outbreak of COVID-19, the National Public Health Centre of the Lithuanian Ministry of Health (NVSC) commissioned an app from UAB “IT sprendimai sėkmei” (UAB), an IT service provider. The app was eventually made available on Google Play, and its privacy policy referenced the NVSC and the service provider as controllers. The NVSC and the service provider had neither concluded nor signed any contract. Eventually, the NVSC terminated the procurement of the app due to a lack of funds.

As a consequence of these events, the Lithuanian Data Protection Authority imposed administrative fines on the NVSC and UAB as joint controllers. On the one hand, the decision was challenged by the NVSC on these grounds:

- it was not a controller for the processing in question

- the service provider built the app, although there was no contract between the parties

- it had not consented to or authorized it to make the app available to the public.

On the other hand, UAB claimed it was merely a processor.

CJEU decision

Firstly, the Court reaffirmed the broad scope of controllership:

- Facts over formalities: someone can be a controller even without a specific contract. On the other hand, the fact that a person is referenced as a controller in a privacy notice is not in itself sufficient to make that person a controller, unless that person had consented (explicitly or implicitly) to this.

- In this case, the NVSC commissioned the app for its own objectives (COVID-19 management). In doing so, the NVSC had foreseen the data processing that would be carried out and had participated in determining the parameters of that app. Therefore, NVSC should be regarded as a controller.

- The fact the NVSC did not acquire the app and did not authorize its dissemination to the public is not relevant. It would have been different, however, if the NVSC expressly forbade UAB to make the app available to the public.

Secondly, the Court reaffirmed that joint controllership does not imply equal responsibility. The level of responsibility depends on the circumstances of the case. Moreover, formal arrangements are not necessary: the parties can nevertheless be joint controllers. In other words, such an arrangement is a consequence of the parties being joint controllers, not a pre-condition for the existence of joint control.

Controller Wrongful Behaviour and Liability of a Controller for Acts of the Processor

In conclusion, the Court ruled that:

- according to Article 83 GDPR, a controller can receive an administrative fine only for an intentional or negligent infringement of the GDPR (i.e. wrongful behaviour). On the other hand, the Court also confirmed that a controller can be fined even if it was not aware of the GDPR infringement

- according to Recital 74 GDPR, the controller is responsible for processing carried out on its behalf by the processor

- the controller would not be liable in the following situations because in these cases the processor would become a controller (Article 28(10) GDPR):

- where the processor has acted for its own purposes

- where the processor has processed data in a manner that is incompatible with the arrangements for the processing set by the controller

- where it cannot be reasonably considered that the controller consented to such processing

Source: https://iapp.org/news/a/the-cjeu-rules-on-the-liability-of-controllers/

Thursday 4 Jan - 3:56 PM

The need for a harmonious approach: rejecting cookies should be as easy as accepting them

Numerous websites use cookies, which are generally divided into ‘essential’ cookies (needed for the website to function) and ‘non-essential’ cookies (which, for example, store important information and user preferences). The regulation of cookies falls under the ePrivacy Directive, which has been transposed into the national laws of EU Member States. The ePrivacy Directive mandates that websites offer transparent information about cookie usage and seek consent for placing non-essential cookies.

Currently, there are different approaches within the European Union (EU) with regards to accepting and denying consent for the placement of cookies. The first approach includes both “Accept All” and “Reject All” options displayed in the initial layer of a cookie consent management solution. The second approach features only the “Accept All” option in the first layer, accompanied by a link to the second layer of the cookie consent management solution where the visitor can reject the use of non-essential cookies.[1]
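The difference between the two approaches can be made concrete by counting the clicks a visitor needs for each choice. The following is a minimal sketch, assuming a hypothetical `Banner` structure and hypothetical button labels (`accept_all`, `reject_all`, `more_options`); it only illustrates the "rejecting should be as easy as accepting" view and is not a legal test.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Banner:
    # Hypothetical model of a cookie banner: button labels per layer.
    first_layer_buttons: tuple
    second_layer_buttons: tuple = ()

def clicks_needed(banner, action):
    """Return how many clicks an action takes, or None if it is not offered."""
    if action in banner.first_layer_buttons:
        return 1
    if action in banner.second_layer_buttons:
        # One click to open the second layer, one click on the button itself.
        return 2
    return None

def reject_as_easy_as_accept(banner):
    """True when rejecting takes no more clicks than accepting."""
    accept = clicks_needed(banner, "accept_all")
    reject = clicks_needed(banner, "reject_all")
    return accept is not None and reject is not None and reject <= accept

# First approach: both options in the first layer.
approach_one = Banner(first_layer_buttons=("accept_all", "reject_all"))
# Second approach: "Accept All" in the first layer, rejection only in the second.
approach_two = Banner(
    first_layer_buttons=("accept_all", "more_options"),
    second_layer_buttons=("reject_all",),
)
```

Under these assumptions, `reject_as_easy_as_accept(approach_one)` holds while `reject_as_easy_as_accept(approach_two)` does not, because rejection costs two clicks against one for acceptance.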

The prevailing approach seems to lean towards the first method: an “accept all” and a “reject all” option in the first layer. Specifically, the Belgian, Austrian and Spanish data protection authorities are in favor of presenting both options in the first layer, which has even been enforced by the French data protection authority.[2] The German[3] data protection authorities do not require a “reject all” when, for example, the consent option is also not displayed in the first layer. The Irish[4] data protection authority indicates that it is sufficient for there to be a consent button in the first layer and a link to further and more detailed information in the second layer.

Those who view the first approach as GDPR compliant regard having to click several times to reject non-essential cookies as a harmful nudging technique: it reduces control over personal data and discourages a visitor from rejecting consent, while steering the visitor towards providing consent (as this is easier and requires less effort). Though the GDPR and the ePrivacy Directive do not explicitly dictate that rejecting consent should be as easy as consenting, it is argued that this can, in fact, be deduced implicitly from the GDPR. The GDPR dictates that consent must be freely given, informed, specific and unambiguous.[5] The data protection authorities in favor of the first approach question whether requiring the visitor to make multiple clicks to reject consent aligns with the GDPR consent requirements and, as such, call into doubt whether the method through which consent is asked is valid. Specifically, is consent truly freely given when a nudging technique is used to steer the consumer in a certain direction? Additionally, the GDPR includes the fairness principle: the same data protection authorities find that offering a way of rejecting cookies that is equal to the way of consenting to them is fair.

This is also a hot topic within the Netherlands. Minister Alexandra van Huffelen of Digital Affairs has written a letter to the Second Chamber. In her letter, she informs the Second Chamber that websites often use dark patterns or nudging techniques to influence the choice of the visitor, for example by making it difficult to reject non-essential cookies.[6]

The Dutch Authority for Consumers and Markets (ACM) is the supervisor of the Dutch Telecommunication Act (the implementation of the ePrivacy Directive) and the Dutch Data Protection Authority (DPA) is the supervisor of the GDPR; both can regulate such matters. The CJEU has already ruled that consent cannot be given by means of a pre-ticked box, rendering such consent invalid.[7] Under the European Data Protection Board (EDPB), a Cookie Banner Task Force has been established. In its January 2023 report, the task force discusses how different practices, such as dark patterns, relate to the ePrivacy Directive and the GDPR.[8] The EDPB has also issued guidelines on dark patterns on social media, which may also be relevant.[9]

Evidently, efforts are being made at European level to provide more clarity on this issue. What is clear, though, is that the majority of the Member States find that offering both an “accept all cookies” and a “reject non-essential cookies” option in the first layer of the cookie banner is the GDPR-compliant route. It is important that the approach to rejecting or accepting consent is streamlined and harmonized across the European Union, thereby enhancing consumer protection and control over personal data.

[1] “Reject All” button in cookie consent banners – An update from the UK and the EU | Technology Law Dispatch

[2] Belgium Checklist on Cookies; Austrian Guidance on Cookies; Spanish Guidance on Cookies; CNIL on refusal and acceptance of cookies

[3] German Guidance on Cookies

[4] Irish Guidance Note: Cookies and other tracking technologies

[5] Articles 4(11) and 7 GDPR.

[6] Letter minister Alexandra van Huffelen of Digital Affairs concerning cookies to the Second Chamber

[7] CJEU 1 October 2019, C-673/17, ECLI:EU:C:2019:801

[8] Report cookie banner taskforce | European Data Protection Board (Europa.eu)

[9] Guidelines 03/2022 on deceptive design patterns in social media platform interfaces: how to recognize and avoid them | European Data Protection Board (Europa.eu)

Tuesday 2 Jan - 10:13 AM

Landmark CJEU Ruling: SCHUFA Case Redefines Automated Decision-Making Responsibilities

The recent judgment (C-634/21) by the Court of Justice of the European Union (CJEU) in the SCHUFA case has significant ramifications, particularly for entities engaged in automated decision-making processes. The case specifically addresses credit reference agencies, establishing that when creating credit repayment probability scores, these agencies are involved in automated individual decision-making. This responsibility extends to both the credit reference agency and the lenders relying on these scores, placing them under the purview of Article 22 of the GDPR.

Article 22 of the GDPR restricts automated individual decision-making, allowing it only under specific circumstances such as contractual necessity, legal justifications, or explicit consent. The CJEU’s ruling emphasizes the need for robust safeguards when engaging in such automated decision-making processes, including the provision for human intervention, the right to express views, and mechanisms to challenge decisions. While the decision pertains to credit reference agencies, its broader implications are felt across industries employing predictive AI tools and automated decision-making services.

On the same day, the CJEU also addressed insolvency data retention in cases C-26/22 and C-64/22. The cases involved individuals (UF and AB) who underwent insolvency proceedings in Germany, seeking the deletion of their data retained by SCHUFA, a credit reference agency. The CJEU ruled on the duration of data retention, emphasizing that German law, which allows public information on insolvencies to be published for six months, should take precedence over private sector interests.

Moreover, the decision underscored the right to erasure under Article 17 of the GDPR, asserting that it applies when personal data has been unlawfully processed. The right to object under Article 21 was reiterated, with the CJEU emphasizing the need for controllers to cease processing personal data if a data subject objects, unless compelling legitimate grounds override the data subject’s interests.

Importantly, the CJEU affirmed individuals’ right to a full judicial review of decisions made by Data Protection Authorities (DPAs). This rejects a more limited interpretation and ensures that data subjects have a comprehensive mechanism to challenge decisions related to their data.

In conclusion, these CJEU rulings accentuate the critical importance of transparency, accountability, and robust data protection measures in the evolving landscape of automated decision-making and data retention. Organizations are urged to align their practices with these legal developments to ensure compliance and safeguard individuals’ rights in the digital age.

Does your organization have questions about automated decision-making? Contact us, the Experts in Data Privacy at info@dpoconsultancy.nl for assistance.

Source: https://iapp.org/news/a/key-takeaways-from-the-cjeus-recent-automated-decision-making-rulings/

Thursday 21 Dec - 10:47 AM

Fear over possible misuse of personal data resulting from a data breach regarded as non-material damage

The Court of Justice of the European Union (“CJEU”) has ruled that the fear of a data subject over the possible misuse of their personal data from a data breach is regarded as non-material damage and can lead to financial compensation from the data controller.

Facts:

The Bulgarian Tax Authority – the data controller – suffered a data breach and as a result, more than 6 million data subjects’ personal data was leaked online. The complainant was one of the 6 million data subjects affected.

The complainant instituted legal proceedings against the data controller under Article 82 General Data Protection Regulation (“GDPR”). Part of her claim included approximately €510 as compensation for the non-material damage resulting from the data breach. She argued that the data controller had caused the damage due to their failure to implement adequate technical and organizational measures in breach of Articles 5, 24 and 32 GDPR. Her non-material damage was the fear that her personal data might be misused in the future and that she could be threatened as a consequence.

Her claims were dismissed, as the court held that the data controller had not caused the data breach, which was caused by the actions of third parties, and that the complainant had failed to prove that the data controller had failed to implement security measures. Furthermore, the court was of the opinion that the complainant had not suffered any non-material damage as her fear was only hypothetical.

The complainant appealed this decision and various questions were referred to the CJEU by the appellate court.

Finding:

The CJEU held that the burden of proof for proving that technical and organizational measures are adequate lies with the data controller and therefore granted the complainant damages for the data breach.

The fact that a third party breaches a data controller does not automatically mean the technical and organizational measures of the data controller were inadequate. The CJEU further held that Articles 24 and 32 GDPR merely require the data controller to implement technical and organizational measures in order to avoid any personal data breach, if at all possible. It cannot be inferred from the language of the GDPR that a breach is sufficient to conclude that the measures were not appropriate, without allowing the data controller to argue otherwise.

The appropriateness of technical and organizational measures, and expert reports regarding this, must be assessed by national courts. This assessment must take place in two stages: firstly, the court must identify the risks of a breach and the potential consequences of those risks; secondly, the court must determine whether the data controller’s technical and organizational measures are appropriate to those risks. The substance of the technical and organizational measures must be examined in light of the criteria set out in Article 32 GDPR, and the investigation must not be confined to how the data controller aimed to comply with Article 32 GDPR.

The interpretation of non-material damages and compensation as relied upon by the CJEU is supported by the Österreichische Post AG case, where the CJEU stated that the concept of damage has to be interpreted broadly. The national courts must ensure that the fear over the misuse of personal data is not unfounded and that it is related to the specific circumstances at issue with the data subject.

Does your organization have questions about appropriate technical and organizational measures? Contact us, the Experts in Data Privacy at info@dpoconsultancy.nl for assistance.

Tuesday 19 Dec - 11:57 AM

EDPB’s Urgent Binding Decision Regarding META

Regarding the decision adopted on 27 October 2023 concerning the processing of personal data for the purpose of behavioral advertising on the legal grounds of contract and legitimate interest within the European Economic Area (“EEA”), the Irish Data Protection Authority has adopted its final decision.

The decision prohibits the processing of personal data for the purpose of behavioral advertising on the legal grounds of contract and legitimate interest. Following this decision, the Norwegian Data Protection Authority requested the European Data Protection Board (“EDPB”) to order measures concerning the entire EEA.

The chair of the EDPB stated that the decision instructs the Irish DPA to prohibit the processing in the entire EEA. The chair also highlighted that, based on the decision of December 2022, contract is not a suitable legal basis for the processing carried out by Meta for behavioral advertising. It was also recalled that Meta had not demonstrated compliance with this decision, which raises the question of the applicability of the urgency procedure under Article 66 GDPR.

In the middle of this year, the Norwegian DPA temporarily prohibited, under Article 66 GDPR, the processing of personal data of Norwegian data subjects by Facebook Norway for behavioral advertising on the basis of contract and legitimate interest. This ban was limited in both duration and geographic area: it lasted three months and applied only in Norway. Following this, in September, the Norwegian DPA requested the EDPB to issue an urgent binding decision regarding the adoption of final measures applicable to the entire EEA.

Given the continuing concerns regarding the infringement of the GDPR, the EDPB concluded that there is an urgent need to act since the rights and freedoms of data subjects are at risk. After examining the evidence provided, the EDPB established that there is an ongoing infringement of Article 6(1), highlighting the inappropriate use of the legal grounds of contract and legitimate interest for behavioral advertising by Meta Ireland.

Since this is a matter of urgency, the EDPB concluded that the regular cooperation mechanisms cannot be applied and that a final measure therefore needs to be ordered urgently, given the possible risks and irreparable harm.

In addition, the Irish DPA did not answer the request for mutual assistance from the Norwegian DPA within the timeframe set in the GDPR. Article 61(8) provides for a presumption of urgency in that situation, which makes the application of the GDPR clear and highlights the need to derogate from the regular cooperation mechanisms.

The EDPB ordered that the Irish DPA comply with the final measures. In conclusion, the EDPB found that imposing a ban on the processing of personal data by Meta Ireland for behavioral advertising on the grounds of contract and legitimate interest is proportionate, necessary and appropriate.

This urgent binding decision was addressed to the Irish DPA, the Norwegian DPA and the other concerned DPAs.

Do you have any questions about developments within privacy and data protection? Contact us, the Experts in Data Privacy, at info@dpoconsultancy.nl for more information.

Source: https://edpb.europa.eu/news/news/2023/edpb-publishes-urgent-binding-decision-regarding-meta_en

Tuesday 12 Dec - 3:22 PM

How much can a DPA fine a Controller who is Part of a Group of Companies?

The Court of Justice of the European Union (CJEU) has clarified the conditions under which a national supervisory authority (DPA) may fine one or more controllers for violations of the GDPR. In particular, it points out that the imposition of such fines is predicated on wrongful conduct. In other words, the violation must be committed intentionally or negligently. Furthermore, if the recipient of the fine is part of a corporate group, the calculation of the fine must be based on the turnover of the group as a whole.

Judgments of the Court in Cases C-683/21 | Nacionalinis visuomenės sveikatos centras and C-807/21 | Deutsche Wohnen

A Lithuanian court and a German court sought the CJEU’s advice regarding the possibility for national DPAs to impose fines on data controllers that infringe the provisions of the regulation.

In the Lithuanian case, the National Public Health Centre under the Ministry of Health contested a fine of € 12 000 imposed on it in the context of the creation, with the assistance of a private undertaking, of a mobile application for registering and monitoring the data of persons exposed to Covid-19.

In the German case, a real estate company and its group of undertakings (which held approximately 166,000 buildings) contested a fine of over € 14 million. The fine was imposed because the company stored the personal data of tenants for longer than necessary.

The CJEU decision

The Court holds that a data controller should not receive an administrative fine unless it is proved that the infringement was committed wrongfully, that is to say, intentionally or negligently. According to the CJEU, this happens when the controller could not have been unaware of the infringing nature of its conduct, regardless of whether or not it was aware of the infringement. In particular, it is not necessary that:

- the infringement was committed by the controller’s management body

- the management body was aware of the infringement

On the contrary, if the data controller is a legal person, it is liable for infringements committed by:

- its representatives, directors, or managers

- any other person acting in the course of the business of that legal person and on its behalf (it is irrelevant if the infringement was committed by an identified natural person)

- a processor performing data processing activities on its behalf

Finally, the Court clarifies that joint controllership arises solely from the fact that the entities concerned have participated in the determination of the purposes and means of processing. There is then no need for a formal arrangement between the entities in question. A common decision, or converging decisions, are sufficient. However, when there is joint controllership, the parties involved must determine their respective responsibilities by means of an arrangement between them.

Final observation

Regarding the calculation of the fine where the addressee is or forms part of an undertaking, the Court states that the competent DPA must:

- take into account the concept of an “undertaking” under competition law

- calculate the fine on the basis of a percentage of the total worldwide annual turnover of the undertaking concerned, taken as a whole, in the preceding business year.
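To illustrate why group turnover matters, the sketch below computes the statutory ceiling of a fine under Article 83(4) and 83(5) GDPR: a fixed amount (10 or 20 million euros) or a percentage (2% or 4%) of the total worldwide annual turnover of the preceding financial year, whichever is higher. The function name and the turnover figures are hypothetical, and the result is only the upper limit, not the fine a DPA would actually impose.

```python
from decimal import Decimal

# Ceilings from Article 83(4) and Article 83(5) GDPR.
FIXED_CAP_EUR = {
    "art_83_4": Decimal("10000000"),
    "art_83_5": Decimal("20000000"),
}
TURNOVER_SHARE = {
    "art_83_4": Decimal("0.02"),
    "art_83_5": Decimal("0.04"),
}

def max_fine_eur(group_worldwide_turnover_eur, tier="art_83_5"):
    """Upper limit of the fine: the fixed cap or the turnover share, whichever is higher.

    group_worldwide_turnover_eur is the turnover of the undertaking as a whole
    (the group), not only of the entity that receives the fine.
    """
    turnover = Decimal(str(group_worldwide_turnover_eur))
    return max(FIXED_CAP_EUR[tier], TURNOVER_SHARE[tier] * turnover)

# Hypothetical figures: a subsidiary with 100 million euros of turnover
# that belongs to a group with 1 billion euros of turnover.
ceiling_subsidiary_only = max_fine_eur(100_000_000)
ceiling_whole_group = max_fine_eur(1_000_000_000)
```

With these made-up numbers, the ceiling based on the subsidiary alone stays at the fixed cap of 20 million euros, while the ceiling based on the whole group rises to 40 million euros.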

Source: https://curia.europa.eu/jcms/upload/docs/application/pdf/2023-12/cp230184en.pdf

Thursday 30 Nov - 8:25 AM

Suffer a ransomware attack and a fine by the competent DPA? Sometimes it happens!

Ransomware is malicious software designed to block access to a computer system until a sum of money is paid. However, some forms of ransomware allow deeper penetration into the internal resources of the targeted entity, and may allow the attackers to:

- steal sensitive data, or

- view sensitive information before encrypting it selectively.

These circumstances imply unlawful access to personal data, in other words, a data breach. Depending on the circumstances, the data breach should be reported to the competent DPA and/or to the data subjects involved. Even though the entity that suffered the ransomware attack is the victim, the DPA can sometimes decide to further punish the entity with a fine. This is what the Italian DPA did regarding a local health board.

The Lack of Privacy by Design measures that led to the Data Breach

The ransomware attack consisted of a virus that blocked access to the board’s database and a ransom request to restore the database functions. In particular, the attack put in jeopardy the personal data of almost a million data subjects (842,118). After it suffered the ransomware attack, the local health board diligently reported the data breach to the DPA, which immediately launched a full-scale investigation. The investigation led to the detection of the following critical issues:

- lack of privacy by design measures (i.e. failure to implement adequate measures to ensure the security of the internal networks, both in relation to their segmentation and segregation)

- the absence of a detailed Data Breach Policy

- poor technical and organizational security measures in place (i.e. the local health board’s VPN authentication procedure involved only single-factor authentication by username and password)

In particular, the lack of segmentation of the internal networks allowed the virus to spread into the whole network from the first point of entrance.

After the Ransomware and the Implementation of New Security Measures… the Fine!

After the breach, the local health board, among others:

- acted to mitigate the damages suffered by the data subjects

- implemented new technical and organizational security measures (i.e. a new VPN authentication procedure accessible through username and password)

Notwithstanding all these new measures, the Italian DPA issued a fine of 30,000 euros because of the previous extensive lack of security measures that led to the data breach.

In conclusion, implementing the correct technical and organizational security measures and a detailed Data Breach Policy is important both to prevent data breaches and DPA fines! If you want to learn more about these and other measures to protect personal data, please feel free to contact us via info@dpoconsultancy.nl for further information.

Tuesday 21 Nov - 1:32 PM

Fine of 30,000 Euros for Municipality of Voorschoten

The Dutch Data Protection Authority (Autoriteit Persoonsgegevens – AP) has imposed a fine of 30,000 euros on the municipality of Voorschoten. The reason behind this penalty is the municipality’s prolonged retention of information about waste from individual households, along with inadequate communication to residents.

In 2018 and 2019, the municipality of Voorschoten replaced household bins and underground containers for apartments. These containers and tokens for the underground containers contain a chip with a unique number linked to a residential address. The aim is to encourage more segregated waste disposal by limiting the amount of residual waste residents can dispose of.

Excessive Retention of “Dumping Data”

If a household offers a bin more frequently than once a week, the garbage truck refuses to empty it. Likewise, access to an underground container is blocked for the rest of the day once 5 bags of residual waste have been disposed of in a single day. To enforce these limits, the systems need access to the “dumping data” of the respective household for some time.

While this is part of the public task of the municipality, the issue arose when the municipality retained the data for too long. The data from bins was stored for as long as they were in use, and data from tokens were kept for 5 years. This duration was far longer than necessary to check if a household exceeded the allowed amount.

Inadequate Information to Residents

Furthermore, the municipality did not properly inform its residents. Although the municipality sent letters about the new containers and tokens, they were not sufficiently clear about the use of personal data in waste collection.

Cessation of Violations

Both violations have now been addressed. The municipality has reduced the retention period to 14 days, and residents have received a new letter, which the municipality first submitted to the AP.
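To illustrate why a short retention period is enough for these checks, here is a minimal sketch in Python. It is not the municipality’s actual system: the function names are hypothetical, and reading “once a week” as a rolling 7-day window is an assumption. Only the 5-bag limit and the 14-day retention period come from the case described above.

```python
from datetime import datetime, timedelta

RETENTION = timedelta(days=14)        # retention period adopted after the AP's intervention
MAX_BAGS_PER_DAY = 5                  # underground container limit described above
MIN_BIN_INTERVAL = timedelta(days=7)  # assumption: "once a week" as a rolling 7-day window


def purge_expired(events, now):
    """Keep only disposal timestamps that are younger than the retention period."""
    return [e for e in events if now - e < RETENTION]


def bin_may_be_emptied(events, now):
    """A bin is refused if it was already offered within the past week."""
    return all(now - e >= MIN_BIN_INTERVAL for e in events)


def container_is_open(events, now):
    """The container is blocked for the rest of the day after 5 bags."""
    bags_today = sum(1 for e in events if e.date() == now.date())
    return bags_today < MAX_BAGS_PER_DAY
```

Both checks only look back a week at most, so in this sketch nothing older than the 14-day window is ever needed to decide whether a household exceeded its allowance.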

Does your organization have any questions about data privacy? Contact us, the Experts in Data Privacy, at info@dpoconsultancy.nl for assistance. We are happy to help you!

Source: https://autoriteitpersoonsgegevens.nl/actueel/boete-van-30000-euro-voor-gemeente-voorschoten

Tuesday 14 Nov - 8:42 AM

Employee Geolocation and Video Surveillance: Is It Always Illicit?

Illicit employee geolocation and video surveillance: a French tale from CNIL

From September to October 2023, the French DPA (CNIL) dealt with cases of illicit employee geolocation and video surveillance. Overall, CNIL fined both private and public-sector entities for a total amount of 97,000 euros. In particular, the fined entities contravened:

- The data minimization principle because of geolocation and continuous video surveillance of employees;

- The duty to inform employees about the processing carried out and its purposes;

- The obligation to respect the rights of individuals, and in particular to respond to objection requests.

Employee geolocation and video surveillance are not always illicit

When it comes to employee surveillance, it is important to consider the imbalance of power between the employer and the employees. In particular, Article 88 GDPR encourages Member States to:

- Protect the rights and freedoms of employees when their personal data are processed by the employer

- Safeguard the employees’ human dignity, legitimate interests, and fundamental rights when monitoring systems are deployed at the workplace

Employee geolocation

Regarding the monitoring of the employees’ geolocation, the main issues addressed by the CNIL were the following:

- the continuous recording of geolocation data

- the impossibility for employees to stop or suspend the tracking system during break times

However, the French DPA also stated that these activities are not always illicit and subject to sanctions. CNIL reaffirmed the principle that, if there is a special justification, such activities can be carried out without causing an excessive infringement of employees’ freedom and their right to privacy.

Employee video surveillance

Regarding the video surveillance of employees, the main issue was the implementation of video surveillance systems that constantly film employees at their workstations without any particular reason. In deciding against the employers, the French DPA stated that the prevention of accidents in the workplace and the gathering of evidence do not per se justify the surveillance measures.

However, the DPA’s conclusions do not exclude employee surveillance measures, as long as:

- the employer sets out a clear and reasonable purpose for implementing the measure

- the measures are not disproportionate to the aims pursued

What to do to implement employee geolocation and video surveillance

An analysis of the CNIL’s decisions shows that there is room for employers to implement employee geolocation and video surveillance. Companies may need these measures for very different reasons:

- prevent misconduct or improve the company’s security

- prevent accidents and ensure employees’ safety

- optimize resources and reduce costs

However, choosing a legitimate purpose and proportionate measures is not sufficient.

Does your organization have any questions about employee surveillance, or does it already have such measures in place without having conducted PIAs or DPIAs? Contact us, the Experts in Data Privacy, at info@dpoconsultancy.nl for assistance. We are happy to help you!

Wednesday 8 Nov - 8:00 AM

GDPR certification is no longer unimaginable!

General Data Protection Regulation (GDPR) certification is in the process of being established. The Dutch Supervisory Authority (DPA) has recently approved criteria that, when met, enable institutions and organizations to issue official GDPR certificates. As such, official GDPR certificates are no longer unimaginable! The approval from the Dutch Supervisory Authority allows the company Brand Compliance to move forward with its application to the Council for Accreditation (RvA). Upon accreditation from the RvA, Brand Compliance will be officially authorized to issue GDPR certificates.

GDPR certificates are a relatively recent tool in the realm of personal data protection oversight. A certificate serves as documented confirmation that the handling of personal data by a product, process or service complies with specific requirements outlined in the GDPR. With a GDPR certificate, organizations can demonstrate to their intended audience that they handle and safeguard personal data with care. It is important to note that obtaining a GDPR certificate is not mandatory.

When it comes to accreditation, certification bodies must adhere to legal requirements. Institutions seeking the authority to issue GDPR certificates must undergo an assessment to evaluate their compliance with these requirements, a process known officially as accreditation. After an initial assessment by the RvA, the DPA reviews whether the criteria align with the relevant requirements of the GDPR. Once approved by the DPA, the RvA is responsible for accrediting the institution. The DPA itself does not grant accreditation to certification bodies. As such, if you wish to become a certification body authorized to issue GDPR certificates, you must submit an application for accreditation to the RvA.

The GDPR certificate is a very welcome addition to the privacy sphere. Though it is not mandatory, it is strongly advised. It establishes greater trust between the organization and its clients and demonstrates that the organization considers privacy to be a top priority.

Source: “AVG-certificering komt stap dichterbij” (GDPR certification comes a step closer), Autoriteit Persoonsgegevens

Thursday 2 Nov - 10:55 AM

Ban on Personalized Advertising on Facebook and Instagram

Meta must cease the unlawful provision of personalized advertisements on Facebook and Instagram within Europe. This determination has been made by European privacy regulators, including the Dutch Data Protection Authority (Autoriteit Persoonsgegevens or AP), through the European Data Protection Board (EDPB).

Aleid Wolfsen, the chair of the AP and vice-chair of the EDPB, stated: “Meta tracks what you post, click on, or like on Facebook and Instagram and uses that information for offering personalized ads. Unlawfully processing the personal information of millions of people on Facebook is a revenue model for Meta. By putting an end to this, people’s privacy is better protected.”

Processing Ban for Meta

The ban on offering personalized ads is a consequence of an expedited procedure initiated within the EDPB with support from the Norwegian data protection authority and the AP. Following Norway’s prior findings, this ban is now applicable throughout Europe.

The EDPB has directed the Irish data protection authority, the lead supervisory body, to take definitive actions against Meta Ireland within a two-week period. This directive stems from the EDPB’s view that the Irish authority has not acted promptly enough.

No Valid Legal Basis

The EDPB has found that Meta engages in the unlawful processing of personal data from Facebook and Instagram users, including details like users’ locations, ages, education, and their online activity. Meta builds user profiles from this information, which advertisers find valuable, contributing to Meta’s revenue.

According to the EDPB, Meta lacks a valid legal basis for handling this personal data. The benefits claimed by Meta do not provide sufficient justification for processing users’ personal data, and users are often unaware that they are essentially trading their personal data for personalized ads when engaging with Meta.

Does your organization have any questions about privacy and advertising? Contact us, the Experts in Data Privacy at info@dpoconsultancy.nl for assistance.

Tuesday 31 Oct - 8:49 AM

Dutch Data Protection Authority to receive more funding for cookies and online tracking

The Autoriteit Persoonsgegevens (“AP”), also known as the Dutch Data Protection Authority, will receive €500,000 extra funding in the coming years that must be used specifically to monitor cookies and online tracking.

In a letter to the House of Representatives, the State Secretary wrote that the Dutch Data Protection Authority will be awarded €500,000 per year, commencing in 2024 and continuing in 2025 and 2026.

This budget is in addition to the extra budget that was promised to the Dutch Data Protection Authority on Prinsjesdag in September this year. While the Dutch Data Protection Authority already supervises this from a General Data Protection Regulation (“GDPR”) perspective, the Dutch government has long believed that the independent regulator should pay more attention to cookies and online tracking.

The extra funding is valid until 2027; thereafter, the Dutch Data Protection Authority will receive a structural budget of €350,000 intended for publishing guidance and developing tools to make investigations easier. In doing so, the Dutch Data Protection Authority will engage and cooperate with other regulators, such as the Autoriteit Consument en Markt.

This is the second time that funding has been made available to the Dutch Data Protection Authority for an explicit task. The first time concerned the supervision of algorithms.

Considering that cookies will be receiving more attention from Supervisory Authorities and the European Data Protection Board (“EDPB”), it is imperative that organizations using cookies ensure that this is done in a compliant manner.

Does your organization have any questions about cookies? Contact us, the Experts in Data Privacy at info@dpoconsultancy.nl for assistance.

Sources:

https://tweakers.net/nieuws/214052/ap-krijgt-half-miljoen-euro-extra-budget-voor-toezicht-op-cookies-en-tracking.html

https://open.overheid.nl/documenten/4835821b-a892-4ec5-8cce-878f0644703e/file

Thursday 26 Oct - 12:59 PM

Notes of Caution on DPF certified companies

What does it mean that a company is Data Privacy Framework certified?

In July 2023, the European Commission adopted a decision to enact the EU-US Data Privacy Framework (DPF). Since this decision, US companies can become DPF-certified. Certification is voluntary, and a company may well decide not to be certified. This means that each company will decide whether to become DPF-certified depending on its:

- type of business operations

- risk appetite

- global privacy program

It is important to remember that in a data processing activity that involves an international transfer of personal data, a DPF-certified company may well be:

- a data controller

- a joint-controller

- a data processor

Regardless of the role, after the full entry into force of the Data Privacy Framework, DPF-certified companies are required to provide an adequate level of protection for personal data received from (or sent to) the EU, in line with the GDPR provisions. Therefore, the key advantage is that the legal basis for the international transfer is equivalent to an adequacy decision.

However, being DPF-certified is not a free-for-all situation for international data transfers concerning the US because:

- the certification might not cover all the products and/or services of the certified company

- a Data Processing Agreement (DPA) is still required according to Article 28 GDPR.

What does it mean that a company is not DPF-certified?

It is important to remember that there is no adequacy decision in place for the US as a country but only for US companies that are DPF-certified. This means that if a company is not DPF-certified the legal basis for the international data transfer cannot be an adequacy decision, but instead:

- Standard Contractual Clauses (SCCs) – Article 46 GDPR

- Binding Corporate Rules, if applicable – Article 47 GDPR

- Derogations for specific situations – Article 49 GDPR

Moreover, a Transfer Impact Assessment (TIA) would be required.
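The decision logic described above can be summarized in a short Python sketch. It is an illustration only, not legal advice: the `Recipient` class, its field names, and the `transfer_checklist` function are hypothetical, and treating a service outside the certification scope like a non-certified recipient is an assumption drawn from the caveats listed earlier.

```python
from dataclasses import dataclass


@dataclass
class Recipient:
    dpf_certified: bool      # is the US company DPF-certified?
    service_in_scope: bool   # hypothetical flag: does the certification cover this service?
    is_processor: bool       # does the recipient act as a data processor?


def transfer_checklist(r: Recipient) -> dict:
    # Assumption: an out-of-scope service is handled like a non-certified recipient.
    adequacy = r.dpf_certified and r.service_in_scope
    if adequacy:
        bases = ["Adequacy decision (EU-US DPF)"]
    else:
        bases = [
            "Standard Contractual Clauses (Article 46 GDPR)",
            "Binding Corporate Rules, if applicable (Article 47 GDPR)",
            "Derogations for specific situations (Article 49 GDPR)",
        ]
    return {
        "legal_basis_options": bases,
        "tia_required": not adequacy,    # a TIA is needed without the adequacy basis
        "dpa_required": r.is_processor,  # Article 28 agreement still applies to processors
    }
```

For example, `transfer_checklist(Recipient(True, True, True))` points to the adequacy decision but still flags the Data Processing Agreement, which reflects the point that certification does not remove the Article 28 requirement.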

Final considerations and two notes of caution